As software engineers, we build applications that eventually run on one or more servers, which consume electricity. Much of that electricity is still generated by burning fossil fuels (coal, oil, gas), which produces carbon dioxide (CO2), the main driver of climate change.

Clearly, our software applications have an environmental impact that depends on the resources (CPU, storage, number of servers, etc.) they consume. What can we do to reduce that footprint? This article provides guidelines that will help you reduce or even eliminate the carbon dioxide (CO2) emissions associated with your software applications, a process also known as decarbonization.

Adopting a resource-efficient high-availability model for your application

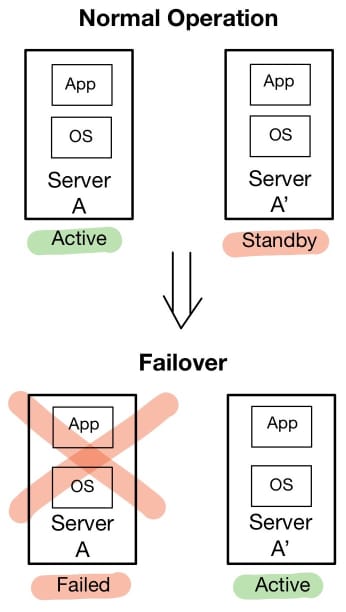

When a failure hits one of our applications, for example a hardware fault in a server, we don’t want our end-users to notice it; they should be able to continue doing business as usual. For this reason, we usually keep one or more redundant servers in “standby” mode, doing nothing other than waiting for an active server to fail so that they can take over. This is illustrated in the following diagram: upon the failure of Server A, a failover is performed to the previously idle Server A’, which takes over responsibility for fulfilling both existing and new user requests.

An enterprise software application typically consists of several servers, with up to 50% of them being redundant, essentially wasting resources while waiting for a failure to happen. Is there an alternative high-availability model that does not involve any redundant servers?

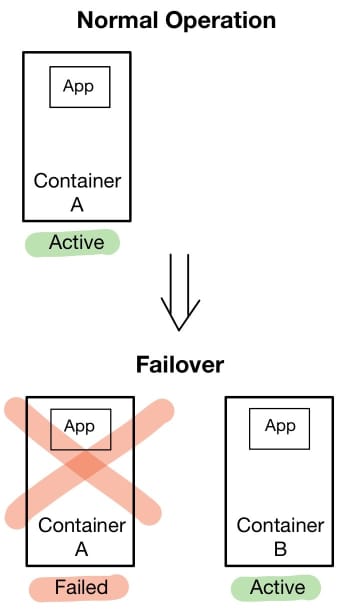

With the advent of cloud and containers, there is a resource-efficient high-availability model that our applications can adopt, hence contributing to a smaller environmental footprint.

One of the main characteristics of containers (Docker or similar) is that their images are typically measured in megabytes, which is orders of magnitude smaller than Virtual Machine images, typically measured in gigabytes. Similarly, these lightweight containers start up in seconds (or even less), whereas a Virtual Machine takes a few minutes to start.

The established practice of using redundant servers is mostly due to the time it takes to spin up a new server instance (i.e. a new Virtual Machine) when an active server fails; if users had to wait several minutes for a new Virtual Machine to start, the failure would be noticeable. If the application were containerized, however, a new container instance could be started in a fraction of that time, allowing for a seamless failover that users would not notice.

As illustrated in the above diagram, containerizing your application enables a high-availability model that frees you from running redundant instances: as soon as an existing container instance of your application fails, a new one is quickly spun up to replace it. Public cloud providers allow us to run containers without worrying about the underlying infrastructure; for example, there are no Virtual Machines to provision or maintain for these containers to run on. Examples of such services are AWS Fargate, Azure Container Instances, and Google Cloud Run.
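The restart-on-failure idea can be sketched in plain Java as an in-process analogy. This is only an illustration under stated assumptions: the class `RestartOnFailure`, its `supervise` method, and the simulated crash are hypothetical names made up for this sketch, and real platforms perform the equivalent step at the container level rather than inside your application.

```java
import java.util.function.Supplier;

/**
 * In-process analogy of restart-on-failure: no idle standby is kept;
 * a replacement instance is started only when the current one fails.
 * All names are hypothetical and not part of any cloud provider API.
 */
final class RestartOnFailure {

    /** Result of supervising one unit of work. */
    static final class Outcome<T> {
        final T value;     // value returned by the instance that succeeded
        final int starts;  // how many instances were started in total

        Outcome(T value, int starts) {
            this.value = value;
            this.starts = starts;
        }
    }

    /** Runs an instance; if it fails, starts a fresh one, up to maxStarts. */
    static <T> Outcome<T> supervise(Supplier<T> instance, int maxStarts) {
        if (maxStarts < 1) {
            throw new IllegalArgumentException("maxStarts must be >= 1");
        }
        RuntimeException lastFailure = null;
        for (int start = 1; start <= maxStarts; start++) {
            try {
                return new Outcome<>(instance.get(), start);
            } catch (RuntimeException e) {
                lastFailure = e; // instance failed: the loop starts a replacement
            }
        }
        throw lastFailure; // every started instance failed
    }

    public static void main(String[] args) {
        int[] calls = {0};
        // Simulated instance that crashes on its first run only.
        Outcome<String> outcome = supervise(() -> {
            if (++calls[0] == 1) {
                throw new IllegalStateException("simulated crash");
            }
            return "request served";
        }, 3);
        System.out.println(outcome.value + " after " + outcome.starts + " start(s)");
    }
}
```

The point of the sketch is that capacity is consumed only by instances that are actually doing work; how fast a replacement can start is what decides whether users notice the failure.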

In conclusion, by containerizing your application and running it on a public cloud provider (using one of the above services), you can adopt a high-availability model that reduces the resources your application consumes, lowering both its cloud bill and its environmental footprint.

Rearchitecting applications that are mostly idle, waiting for an event to be processed

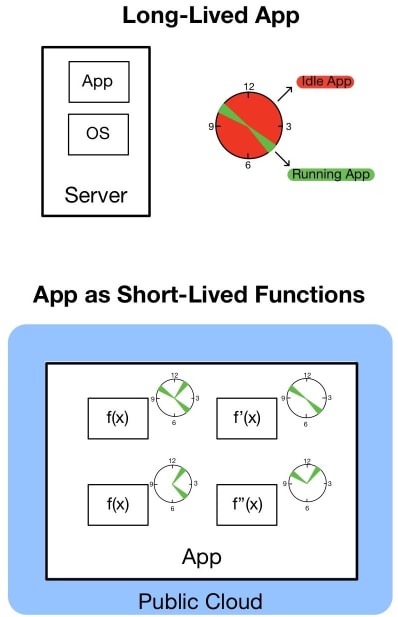

If you have applications that are idle most of the time, waiting for an event to happen so that they can start processing it, this is a sign that they could be rearchitected to adopt the Function as a Service (FaaS) paradigm.

Contrary to old-school applications that run all the time and consume resources even when they are not doing any actual work (not until an event is triggered), applications leveraging FaaS are executed only in response to events or requests. They run just for the short period of time needed to process an event, and are then terminated if no other events or requests are pending. This approach can save a lot in terms of resource usage, effectively reducing both the operational costs and the environmental footprint of event-driven applications.

As displayed in the above diagram, an application is broken into one or more individual functions f(x), f’(x), f’’(x), … with each one of them doing a single, independent piece of work. Each function can be individually triggered by one of the following actions:

- An HTTP request

- An event, such as a new file uploaded to cloud storage, or an existing file modified

- A new message arriving in a queue

- A task scheduled to run at a given time (like a cron job)

Clearly, when adopting a FaaS model, your application does not run as a whole, but individual functions are executed instead. Therefore, FaaS enables us to transition from the older paradigm of running a long-lived monolithic application to executing short-lived lightweight functions, hence embracing a fine-grained resource consumption model.
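A minimal Java sketch of this model is shown below. It is an illustration, not a provider API: the `FunctionDispatcher` class and the trigger names (`http-request`, `file-uploaded`, `queue-message`) are hypothetical, and in a real FaaS platform the cloud provider plays the role of the dispatcher.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.function.Function;

/**
 * Sketch of the FaaS idea: each trigger type maps to one small, stateless
 * function that runs only when a matching event arrives.
 * The dispatcher and trigger names are hypothetical.
 */
final class FunctionDispatcher {

    private final Map<String, Function<String, String>> functions = new HashMap<>();

    /** Registers the function to execute for a given trigger type. */
    public void register(String trigger, Function<String, String> function) {
        functions.put(trigger, function);
    }

    /** Executes only the function bound to this trigger, then returns. */
    public String onEvent(String trigger, String payload) {
        Function<String, String> function = functions.get(trigger);
        if (function == null) {
            throw new IllegalArgumentException("No function registered for: " + trigger);
        }
        return function.apply(payload);
    }

    public static void main(String[] args) {
        FunctionDispatcher dispatcher = new FunctionDispatcher();
        dispatcher.register("http-request", path -> "200 OK for " + path);
        dispatcher.register("file-uploaded", name -> "thumbnail created for " + name);
        dispatcher.register("queue-message", body -> "processed message " + body);

        // Nothing runs until an event arrives; one function runs per event.
        System.out.println(dispatcher.onEvent("file-uploaded", "photo.png"));
    }
}
```

Each registered function is independent of the others, which is what lets a platform start, scale, and stop them individually.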

FaaS falls under the category of serverless architectures, where software engineers can focus on writing the application itself without having to provision or manage any servers; the cloud provider handles all the underlying infrastructure that runs FaaS functions. Moreover, the scaling of individual functions is handled automatically by the cloud provider, for example by running multiple instances of a function in response to a high number of incoming HTTP requests.

FaaS is supported by most public cloud providers, through services such as AWS Lambda, Azure Functions, Google Cloud Functions, IBM Cloud Functions, and Oracle Cloud Functions.

Decarbonizing your Java applications

With Java being around for more than 25 years, a lot of enterprise applications are written in Java. Given the popularity of Java, here are a few decarbonization tips that you can specifically apply to your Java applications:

Upgrade to the latest version of Java

According to the State of the Developer Ecosystem Survey 2020 conducted by JetBrains, Java ranks second among the primary languages used by professional developers. Java 8 is regularly used by 75% of them, even though several newer versions have been released since, with Java 15 being the most recent one as of September 2020.

Modern versions of Java introduced several enhancements that reduce the memory footprint of Java applications:

- Since Java 9, the memory allocation for String objects has been enhanced to consume less space in the Java heap, resulting in footprint and performance improvements of up to 10%. More details are available in my article on how your applications can run faster with Java 9.

- Since Java 10, Java applications running on the same host can take advantage of Class-Data Sharing (CDS) to reduce their memory footprint by approximately 15%. For more information, you may refer to my article on how to reduce the cloud bill of your Java applications.
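To give a feel for the Java 9 String enhancement (known as Compact Strings, JEP 254), the sketch below estimates the bytes needed for a string's characters before and after the change. It is a rough model under stated assumptions: object headers and array overhead are ignored, and the `StringFootprint` class and its method names are hypothetical helpers written for this illustration.

```java
/**
 * Rough estimate of character storage with and without the Java 9
 * compact String representation. Headers and array overhead are ignored;
 * the class and method names are hypothetical.
 */
final class StringFootprint {

    /** True if every char fits in one byte (Latin-1), so the compact form applies. */
    static boolean isLatin1(String s) {
        for (int i = 0; i < s.length(); i++) {
            if (s.charAt(i) > 0xFF) {
                return false;
            }
        }
        return true;
    }

    /** Before Java 9: always two bytes per char. */
    static long bytesBeforeJava9(String s) {
        return 2L * s.length();
    }

    /** Since Java 9: one byte per char if the whole string is Latin-1. */
    static long bytesSinceJava9(String s) {
        return isLatin1(s) ? s.length() : 2L * s.length();
    }

    public static void main(String[] args) {
        String[] samples = {"decarbonization", "CO\u2082"};
        for (String sample : samples) {
            System.out.println(sample + ": " + bytesBeforeJava9(sample)
                    + " bytes before Java 9, " + bytesSinceJava9(sample) + " bytes since");
        }
    }
}
```

Strings made only of Latin-1 characters halve their character storage, while a string containing even one character outside that range keeps the two-byte encoding.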

You can now seize the opportunity to reduce the costs and environmental footprint of your applications by upgrading to one of the latest versions of Java.

Use a JVM that consumes less memory

Nowadays, there are several distributions of Java available, such as AdoptOpenJDK, Amazon Corretto, and Azul Zulu. One difference that is often overlooked is the Java Virtual Machine (JVM) that each distribution relies on. For example, AdoptOpenJDK provides two flavors of Java, each using a different Virtual Machine (VM):

- HotSpot: this is the VM that has always been shipped with Oracle JDK; it is the most widely used VM today.

- OpenJ9: a VM that was originally released with IBM JDK, claiming to be designed for low memory usage and fast startup.

As analyzed in my previous article, an application running on the OpenJ9 VM had a memory footprint about 71% smaller on Java 8, and about 54% smaller on Java 11, than the same application running on the HotSpot VM. Given that Amazon Corretto and Azul Zulu are OpenJDK builds that ship the HotSpot VM, they are expected to have memory footprints similar to AdoptOpenJDK’s HotSpot VM.
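If you want to check which VM your own application runs on and get a first impression of its memory use, a sketch along the following lines may help. It reports memory as seen from inside the JVM (heap plus non-heap), which is not the same as the process footprint measured by the operating system; the `JvmFootprintReport` class name is hypothetical.

```java
import java.lang.management.ManagementFactory;
import java.lang.management.MemoryMXBean;

/**
 * Prints the running VM and the memory it currently reports as used.
 * This is the JVM's own view, not the process size seen by the OS.
 */
final class JvmFootprintReport {

    /** Name of the running VM as exposed by the standard system property. */
    static String vmName() {
        return System.getProperty("java.vm.name");
    }

    /** Heap plus non-heap memory currently used, in mebibytes. */
    static long usedMegabytes() {
        MemoryMXBean memory = ManagementFactory.getMemoryMXBean();
        long usedBytes = memory.getHeapMemoryUsage().getUsed()
                + memory.getNonHeapMemoryUsage().getUsed();
        return usedBytes / (1024 * 1024);
    }

    public static void main(String[] args) {
        System.out.println("VM: " + vmName());
        System.out.println("Java version: " + System.getProperty("java.version"));
        System.out.println("Used memory (heap + non-heap): " + usedMegabytes() + " MB");
    }
}
```

Running the same application with this report on two different VMs gives a quick, if coarse, comparison; for figures comparable to the ones above, measure the whole process under a realistic workload.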

A memory-efficient VM like OpenJ9 enables you to host more applications on a given server (i.e. to achieve a higher application density per server), so fewer servers are needed to host all the Java applications that comprise your software solution.
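The effect on application density can be worked through with simple arithmetic. The sketch below assumes a hypothetical server with 16 GB of memory and a hypothetical 500 MB footprint per application on HotSpot; only the 54% reduction is taken from the Java 11 measurement mentioned above. Memory needed by the operating system, as well as CPU limits, are ignored.

```java
/**
 * Back-of-the-envelope application density per server.
 * Server size and HotSpot footprint are hypothetical inputs.
 */
final class ApplicationDensity {

    /** Whole number of application instances that fit in the server's memory. */
    static long instancesPerServer(long serverMemoryMb, long appMemoryMb) {
        if (appMemoryMb <= 0) {
            throw new IllegalArgumentException("appMemoryMb must be positive");
        }
        return serverMemoryMb / appMemoryMb;
    }

    /** Footprint after a percentage reduction, e.g. 54 for "54% less". */
    static long reducedFootprintMb(long footprintMb, int reductionPercent) {
        return footprintMb * (100 - reductionPercent) / 100;
    }

    public static void main(String[] args) {
        long serverMemoryMb = 16_384;  // hypothetical 16 GB server
        long hotSpotAppMb = 500;       // hypothetical footprint on HotSpot
        long openJ9AppMb = reducedFootprintMb(hotSpotAppMb, 54); // 54% less

        System.out.println("HotSpot: " + instancesPerServer(serverMemoryMb, hotSpotAppMb)
                + " instances per server");
        System.out.println("OpenJ9:  " + instancesPerServer(serverMemoryMb, openJ9AppMb)
                + " instances per server");
    }
}
```

Under these assumptions the same server hosts 32 instances on HotSpot and 71 on OpenJ9, which is where the reduction in server count would come from.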

Clearly, running your Java applications on OpenJ9 can have a lower impact on the environment (since fewer resources are consumed), while at the same time it can help you cut down on your cloud costs.

How are you going to combat climate change?

As software engineers, we can all help prevent climate change, starting with the decarbonization of our software applications, improving them to consume fewer resources, as analyzed in this article.

What will be your first steps in this journey towards the decarbonization of your software applications?